
AI + 3D Audio

Intelligent Audio System with Environment Awareness

Most audio systems in use today reproduce audio content passively, regardless of room characteristics and listening conditions. To realize a truly intelligent and adaptive audio rendering system, we aim to answer the following research questions:

- What if an audio system can recognize the surrounding environment and adapt to its characteristics?

- What if the acoustic properties of that environment can be identified from the noises of everyday life?

- How can we train the audio system to understand the "environment" and "user behavior"?

- How can we extract spatial cues in 3D audio scenes and adapt them to the identified environment?

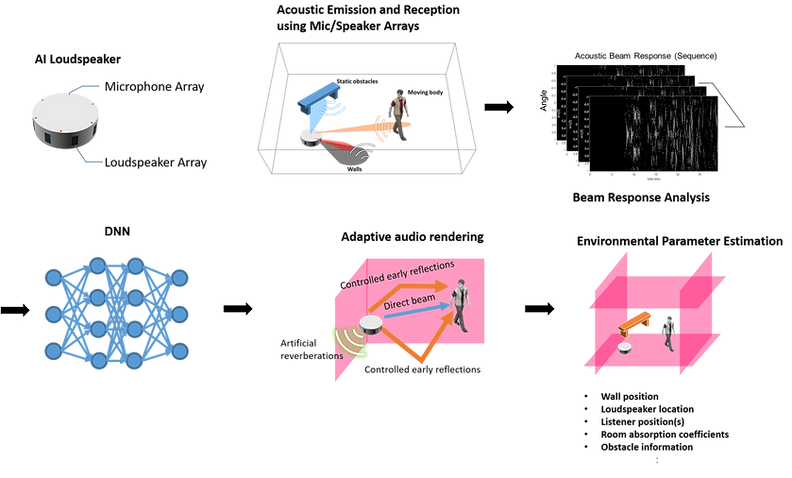

The microphone and loudspeaker arrays installed in a conventional AI loudspeaker make it possible to capture spatial information both from the audio played by the system itself and from various environmental noises. In this work, we explore the possibility of building a DNN architecture that extracts environmental information from multichannel array signals and spatial information from 3D audio signals. The extracted information and the original 3D audio data are then used to recreate the audio scene that best suits the given playback environment.
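To make "environmental information" concrete, the sketch below estimates one classical room property, the reverberation time (RT60), using Schroeder backward integration. This is only an illustration and not the DNN explored in this work: it assumes a room impulse response is already available, whereas the proposed system would infer such properties from array recordings. The function names, the synthetic impulse response, and the -5 dB to -25 dB fitting range are all assumptions made for the example.

```python
import numpy as np


def schroeder_decay_db(rir):
    """Energy decay curve (dB) by backward integration of the squared response."""
    energy = np.asarray(rir, dtype=float) ** 2
    edc = np.cumsum(energy[::-1])[::-1]  # energy remaining after each sample
    return 10.0 * np.log10(edc / edc[0])


def estimate_rt60(rir, fs, start_db=-5.0, end_db=-25.0):
    """Fit a line to the decay curve and extrapolate it to -60 dB.

    The fitting range (start_db, end_db) is an assumed choice for this sketch.
    """
    decay = schroeder_decay_db(rir)
    t = np.arange(len(decay)) / fs
    mask = (decay <= start_db) & (decay >= end_db)
    slope, _ = np.polyfit(t[mask], decay[mask], 1)  # slope in dB per second
    return -60.0 / slope


def synthetic_rir(rt60, fs, duration=1.0, seed=0):
    """Hypothetical test signal: white noise with an exponential envelope.

    The amplitude envelope is chosen so that the energy falls by 60 dB
    at t = rt60.
    """
    rng = np.random.default_rng(seed)
    t = np.arange(int(duration * fs)) / fs
    envelope = 10.0 ** (-3.0 * t / rt60)
    return rng.standard_normal(t.size) * envelope
```

For example, `estimate_rt60(synthetic_rir(rt60=0.4, fs=16000), fs=16000)` should return a value close to 0.4 seconds. A learned model would replace the explicit impulse response with features extracted from the multichannel recordings.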

Adaptation of 3D audio content: de-reverberation example

This research is supported by a National Research Foundation of Korea (NRF) grant funded by the Korea government (MSIT).